Case Study: Leveraging GenAI to rebuild the OpenXcom Engine

TL:DR

- Our findings show that AI-assisted development enabled a single engineer to rebuild an entire game engine in just three months, a task typically requiring a full team and around 20-24 man months.

- Achieved an average of 416% frame rate increase.

- Over 1,800 source files were created or modified during this development.

- AI streamlined Vulkan integration, UI scripting, and debugging, significantly reducing workload while maintaining code quality and allowing rapid iteration.

- By automating repetitive tasks and providing reference implementations, AI accelerated development and improved debugging efficiency.

- While AI had limitations, such as hallucinating incorrect solutions or reinforcing suboptimal design choices, its role as a force multiplier led to a structured and maintainable engine modernization.

- This case study proves that AI-assisted engineering is a scalable solution, empowering solo developers and small teams to complete large-scale projects faster and more efficiently.

Abstract

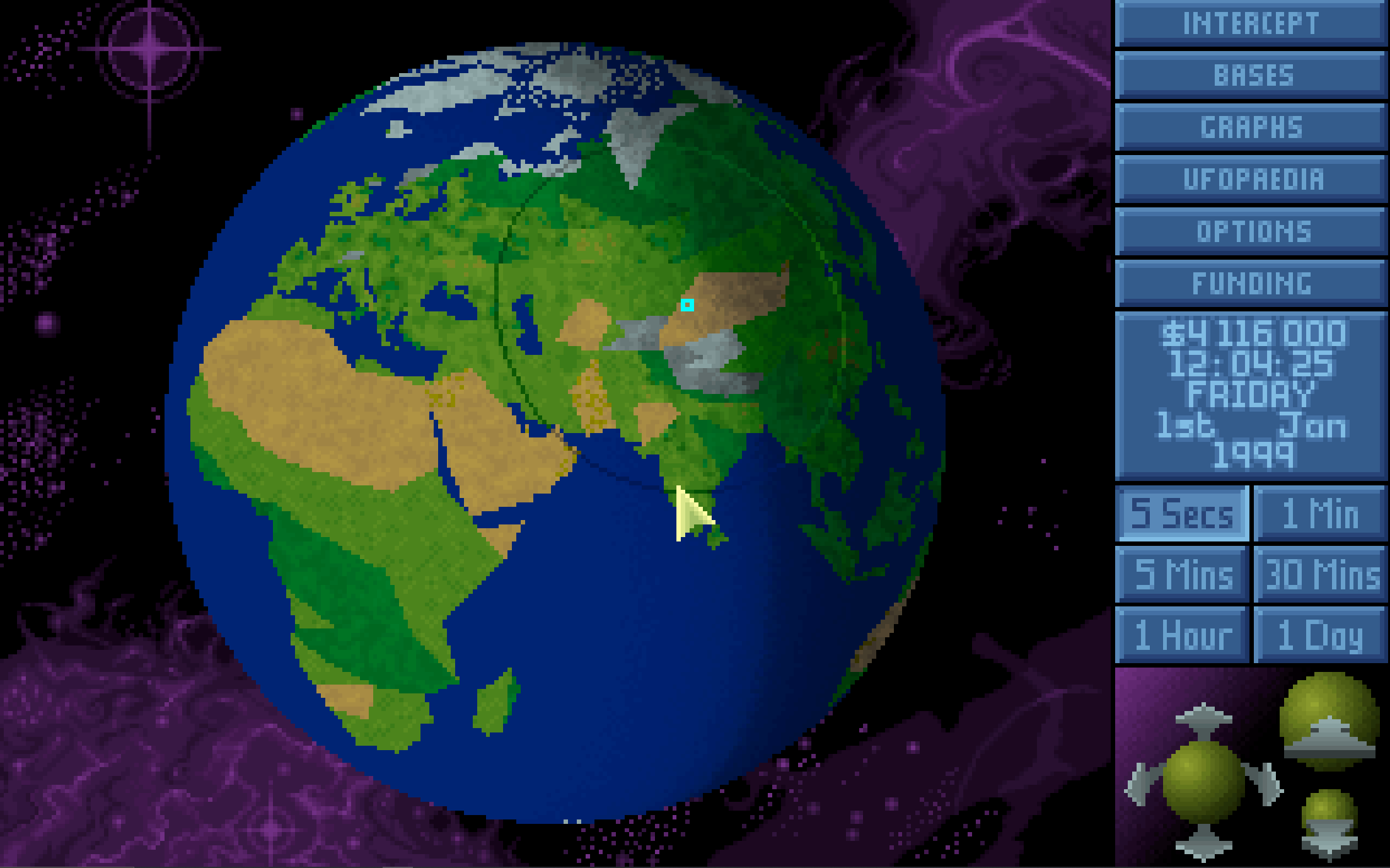

Rebuilding OpenXcom’s engine required overcoming complex technical challenges while maintaining the game’s classic 8-bit aesthetic. The initial goal was to integrate Lua for enhanced modding capabilities, but limitations in the existing engine quickly became apparent, prompting a full rewrite. Vulkan was chosen instead to future-proof the codebase for potential graphics engine upgrades. This decision allowed for a more flexible architecture that could support future graphical improvements beyond the immediate needs of the project.

The key question we sought to answer was how far a solo developer could go in building a complete game engine using generative AI and large language models (LLMs) as development assistants. With limited resources, AI-driven tools were leveraged to accelerate development, assisting in refactoring the game’s architecture, implementing Vulkan rendering, and optimizing performance. This case study explores how AI transformed a solo development effort, enabling rapid iteration, automation, and code assistance to deliver a functional game engine in just three months.

Our findings show that AI-assisted development enabled a single engineer to rebuild an entire game engine in just three months, a task typically requiring a full team. AI streamlined Vulkan integration, UI scripting, and debugging, significantly reducing workload while maintaining code quality and allowing rapid iteration. By automating repetitive tasks and providing reference implementations, AI accelerated development and improved debugging efficiency. While AI had limitations, such as hallucinating incorrect solutions or reinforcing suboptimal design choices, its role as a force multiplier led to a structured and maintainable engine modernization. This case study proves that AI-assisted engineering is a scalable solution, empowering solo developers and small teams to complete large-scale projects faster and more efficiently.

Introduction

The idea of rebuilding the X-COM engine stemmed from a desire to modernize OpenXcom while retaining its original charm. The goal was to enable Lua for enhanced modding capabilities, allowing for more flexible game logic and easier expansion by the community. While SDL 1.1 was outdated, it did not prevent Lua integration itself. Instead, its limitations became apparent when implementing the ‘Inspector’, a debugging tool designed to analyze and modify in-game elements in real-time. The existing SDL 1.1 framework lacked the necessary flexibility and rendering capabilities to support this tool effectively, making a complete rewrite of the graphics, audio, and input layers necessary to support both Lua and advanced debugging features.

Given the need for a rewrite, choosing the right graphics backend was crucial. While updating to SDL 3 was an option, Vulkan was selected instead to ensure long-term scalability and performance improvements. Vulkan provided better control over GPU resources, lower overhead, and the potential for multi-threaded rendering, which was a significant step forward from the older SDL-based approach. This choice future-proofed the engine, allowing for potential support of alternative rendering backends beyond Vulkan, such as DirectX or Metal, making the architecture more adaptable to future advancements.

AI Guys is a company specializing in AI-driven game development. This project was both a technical challenge and a proof of concept, demonstrating how AI can accelerate complex software development. OpenXcom, a fan-driven project that revitalized the original X-COM: UFO Defense, served as an excellent foundation but was built on older technologies. This case study details how AI accelerated the redevelopment process, allowing a single engineer to achieve what would typically require a team.

Background

The OpenXcom project was originally created as a fan-driven effort to modernize and expand upon the classic X-COM: UFO Defense, introducing new features, improved modding support, and cross-platform compatibility. However, the project had been built on aging technology, primarily utilizing SDL 1.1, which imposed several limitations on modern development workflows. The need for more flexible modding capabilities, performance improvements, and better debugging tools led to the decision to undertake a full-scale rewrite of the engine.

The primary goal was to integrate Lua scripting, allowing for greater control over game logic, UI elements, and procedural content generation. However, this integration was hindered by the rigid structure of the existing codebase and the outdated graphics, input, and audio systems. As a result, it became evident that a more comprehensive refactor was required to support modern development needs while preserving compatibility with legacy assets and mods.

At the same time, a shift to Vulkan was chosen over upgrading to SDL 3, not just for performance gains but to provide a long-term foundation for future graphics engine enhancements. Vulkan’s explicit control over GPU resources made it an ideal choice for improving rendering efficiency and reducing CPU overhead, particularly for a 2D game that relies on fast texture transfers rather than complex 3D rendering.

This project also served as a proof-of-concept for AI-assisted engineering. By leveraging generative AI tools, a single engineer was able to undertake the daunting task of rewriting an entire game engine, covering multiple disciplines that typically require a team of specialists. AI assistance was utilized in debugging, code refactoring, and problem-solving, providing a significant boost to development speed and efficiency. This case study highlights not only the technical achievements of the rewrite but also the potential of AI in enabling smaller teams to tackle ambitious game development challenges.

AI Tools Utilized

- ChatGPT – Used extensively for discussing technical choices, troubleshooting issues, and generating reference implementations. It was particularly effective in debugging error messages and handling context-aware code snippets.

- Claude 3 Sonnet – Served as another valuable tool for analyzing different technical approaches. It provided alternative solutions when encountering limitations and was useful for broadening the scope of possible implementations.

- GitHub Copilot – Integrated directly into Visual Studio, Copilot provided real-time code suggestions based on the existing project structure, naming conventions, and coding style. It was instrumental in writing entire classes and functions with minimal manual input.

- Cursor – Primarily used for file processing implementations. By simply adding function descriptions in comments, Cursor inferred and generated much of the required functionality. However, its lack of MSVC compiler integration presented some challenges, though this was a Microsoft limitation rather than a fault of Cursor itself.

- CodeLlama – A locally hosted alternative to Copilot, fine-tuned for C++. It provided high-quality code suggestions with better comments and stability. While it still required oversight, it often outperformed Copilot in generating well-structured code.

- DeepSeek Coder – Another model tested alongside CodeLlama. While it provided comparable code quality, hardware limitations restricted the use of larger model variants. Higher-capacity models would likely have handled complex challenges even better.

AI Applications in Development:

- Technical Sounding Board – LLMs provided valuable insights when discussing architectural decisions, refactoring strategies, and alternative approaches to implementing key features.

- Reference Implementations – AI models generated example implementations for Vulkan rendering, entity-component systems, and virtual file management, which were then adapted for the project.

- Unit Test Generation – AI-assisted in writing unit tests to validate changes, ensuring refactored code behaved as expected without introducing regressions.

- Error Analysis & Debugging Assistance – While debugging was a manual process, AI tools helped interpret error messages, suggest fixes, and provide explanations for compiler warnings and runtime issues.

By leveraging AI, the project achieved several key advancements that modernized OpenXcom’s architecture and development pipeline. The following list is not an exhaustive list, but highlights the major improvements that have been made:

- Vulkan-Based Rendering Pipeline – A complete overhaul of the rendering system, replacing SDL with Vulkan for better performance, lower latency, and enhanced future compatibility.

- Entity-Component System (ECS) – Implemented a modular ECS to better structure game logic, making it more maintainable and scalable.

- Redesigned Virtual File System – Developed a flexible virtual file system that improves modding capabilities by supporting multiple storage backends seamlessly.

- Enhanced Options System – Introduced a layered options system with support for default, config file, command line, and program override settings, ensuring flexible user configurations.

- Unified CMake Build System – Standardized the build process across platforms, simplifying compilation and dependency management.

- Backward-Compatible Mod Interface – Built a new modding interface that preserves compatibility with existing mods while extending support for future expansions (ongoing development).

- New UI System – Retained the game’s original interface while enhancing scripting control through new features like anchors. These additions allow for more precise positioning and dynamic layout adjustments, making it easier for modders to extend and customize the UI without modifying the core game code.

- New Font System – The font rendering system has been completely re-written, now utilizing the HarfBuzz library to support complex text shaping for languages like Hebrew and Arabic. This system ensures proper glyph positioning and rendering for right-to-left scripts, significantly improving multilingual support. Additionally, the new implementation includes support for ANSI escape codes, allowing for color-coded text output.

- Platform-Specific Overrides – Implemented specialized handling for platform window management and event processing to ensure optimal performance on different operating systems.

- Handle-Based Resource System – Designed a robust resource management system using handles, ensuring efficient allocation and cleanup of assets without direct pointer dependencies.

- Entity Inspector – Allows for a modder to see the entity and object values in-game, as well as edit and modify those values live.

Challenges

Unfamiliar with Discipline

The engineer undertaking this project had no prior experience with modern graphics programming, making Vulkan’s intricate architecture particularly challenging. Unlike traditional graphics APIs, Vulkan requires explicit control over memory management, synchronization, and command buffer execution, all of which introduced a significant learning curve. Integrating Vulkan into the engine required overcoming knowledge gaps in pipeline creation, resource binding, and GPU-accelerated rendering techniques, requiring extensive study and experimentation.

Beyond graphics programming, additional technical hurdles arose in text rendering and internationalization. Proper font kerning, right-to-left text support, and handling multiple languages with complex shaping rules presented unique challenges. AI-assisted tools provided useful references for integrating libraries like HarfBuzz, but they often failed to fully account for the nuances of multilingual rendering. Through trial and refinement, a combination of HarfBuzz and libunibreak was implemented to achieve robust text shaping and word wrapping, though some edge cases still required manual intervention.

Another major challenge was adapting to OpenXcom’s deeply integrated rule-based systems. Unlike many modern game engines that rely on more conventional object-oriented architectures, OpenXcom’s mechanics were built on a highly customized set of rules that dictated game behavior. AI models frequently misinterpreted these structures, leading to incorrect assumptions when refactoring code. Navigating and modernizing these systems required a careful balance of AI-assisted code generation and manual analysis, ensuring that the engine maintained its original functionality while benefiting from the structural improvements introduced by the rewrite.

While AI significantly accelerated the learning process, it did not eliminate the need for domain expertise. Many AI-generated solutions contained errors or were misaligned with the project’s goals, requiring validation through external research and expert consultation. The integration of Vulkan, font rendering systems, and OpenXcom’s rule-based logic was ultimately achieved through a combination of AI-generated insights and human-driven refinement, proving that while AI is a powerful development aid, it still requires an experienced engineer to direct and evaluate its contributions effectively.

AI Hallucinations

AI tools occasionally generated incorrect or non-existent technical solutions, requiring careful verification and debugging to avoid misleading implementations. One of the more persistent issues encountered was AI making incorrect assumptions about the nature of the project. For example, because Vulkan was being used as the rendering backend, AI often assumed that the project involved rendering complex 3D models and suggested solutions optimized for that use case. However, in reality, Vulkan was chosen primarily for its efficient GPU memory transfer capabilities, with rasterization handled at the hardware level for 2D image composition.

This misunderstanding led to frequent misaligned recommendations, such as suggesting unnecessary 3D transformation matrices, complex shader pipelines intended for 3D lighting effects, or over-engineered scene graph solutions. Debugging and refining AI-generated code required significant intervention to align with the project’s actual 2D rendering requirements.

Additionally, AI often proposed function calls and APIs that either didn’t exist or were misapplied, leading to compilation errors or incorrect graphical output. Many suggestions required extensive validation, often necessitating deep dives into official Vulkan documentation or external expert consultation. While AI significantly accelerated coding tasks, its inability to reliably contextualize the specific application of Vulkan in a 2D engine meant that recommendations needed to be treated with caution and cross-checked for relevance before integration.

Misaligned Design Patterns

AI often suggested design patterns that were ineffective or suboptimal for the given problem, favoring widely recognized textbook solutions over more context-aware approaches. When implementing a shared function, an LLM repeatedly replaced a template class with an interface using virtual functions—an approach misaligned with performance goals and unnecessary for the specific use case. This highlighted a recurring issue where AI models prioritized conventional software design paradigms without fully considering constraints such as execution speed, memory overhead, and maintainability.

In performance-sensitive areas, AI-generated solutions frequently introduced excessive abstraction layers, leading to unnecessary complexity and runtime inefficiencies. Additionally, AI models struggled to differentiate between situations that required design flexibility versus those where a minimal, targeted approach was optimal. As a result, significant manual intervention was required to refine AI-generated suggestions, ensuring that design choices aligned with both the project’s technical requirements and long-term maintainability.

Limited Context Extraction

AI struggled to maintain full project context, necessitating frequent copy-pasting of relevant files and code snippets to ensure it had enough information to provide useful responses.

Development was conducted using both Visual Studio and Visual Studio Code, each presenting unique challenges. Visual Studio offered better integration with GitHub Copilot, making in-line suggestions more effective. However, it struggled with external models like CodeLlama and DeepSeek Coder, limiting their usability within the IDE.

Conversely, Visual Studio Code supported a broader range of AI integrations but introduced its own set of issues. Several AI-driven extensions failed to properly parse and insert generated code, occasionally outputting plain English explanations directly into source files instead of valid syntax. This necessitated additional manual intervention, increasing inefficiencies in the development workflow. The inconsistent behavior between these tools underscored the limitations of current AI integrations in maintaining full project awareness and providing seamless assistance across different environments.

Gaps in Domain-Specific Knowledge

While AI was effective for general programming tasks, it struggled with highly specialized topics such as Vulkan intricacies and the mechanics of legacy X-COM systems. This gap in domain-specific expertise often resulted in incorrect or overly simplistic solutions, requiring significant manual research and validation to ensure accuracy. In Vulkan-related implementations, AI-generated suggestions frequently overlooked critical concepts like memory barriers, synchronization, and optimal pipeline configurations. Similarly, when handling X-COM’s original game logic and unique data structures, AI models failed to recognize key architectural decisions from the original codebase, leading to inefficient or incorrect approaches. As a result, external documentation, community knowledge, and direct testing were necessary to bridge these gaps and refine AI-generated implementations.

Reinforcement of Suboptimal Decisions

AI frequently reinforced design choices, even when they were suboptimal or incorrect, making it difficult to rely on its assessments for unbiased architectural decision-making. Instead of critically evaluating a given approach, AI often provided justification for the existing solution, regardless of whether it aligned with best practices or project-specific constraints. This tendency resulted in missed opportunities for optimization and led to situations where flawed implementations were further refined rather than reconsidered. As a result, external validation and manual review were essential to ensure that architectural decisions were based on sound engineering principles rather than AI-generated confirmation bias.

Specific Issues

Palette Handling

The game relies on 8bpp palette images, a format that most modern graphics libraries no longer support. As a result, AI models often struggled to account for this limitation, leading to hard-to-diagnose issues, particularly when handling pointer logic during file loading. Without native palette handling, many AI-generated implementations failed to correctly manage color indexing and transparency, requiring manual correction.

A notable example of this challenge was in font rendering. The game’s fonts are 8bpp and use a limited number of colors, typically six per font. When combined with ANSI escape color codes, proper rendering required a palette structured as 1 + (5 × 16), where color 0 was always transparent. Given these constraints, AI models consistently generated incorrect code that failed to respect palette limitations, requiring significant adjustments to achieve the desired behavior.

Font Handling

Supporting word wrapping across multiple languages presents significant challenges, especially when considering dynamic font thickness, mid-layout font changes, and internationalization. While HarfBuzz provided robust text layout capabilities, AI-generated solutions struggled to produce optimal word wrapping logic that accounted for these complexities.

To address this, libunibreak was integrated alongside HarfBuzz to handle language-specific line breaking rules. Despite this improvement, issues remain in certain languages where AI-generated solutions failed to provide fully compliant implementations. These challenges likely stem from the fact that word wrapping and text shaping are rarely implemented from scratch in modern development, leading to gaps in AI training data. However, ensuring proper internationalization was a priority in the engine rewrite, necessitating continuous refinement to achieve a reliable and flexible solution.

Single Missing Pixel

Pixel accuracy is critical in X-COM’s 320x200 resolution design, where even a single missing pixel can impact the visual integrity of the game. During the implementation of line drawing in Vulkan, an issue was encountered where the lower-right pixel of a rendered box was missing.

Resolving this issue proved to be a significant challenge. Multiple LLMs were consulted to diagnose the problem, but none provided a correct explanation. Instead, their suggestions led to unproductive iterations, often moving further away from the desired solution.

Ultimately, external expertise was required. Consultation with experienced graphics engineers revealed the root cause: a lesser-known rasterization limitation called the Diamond Exit Rule. Once identified, the issue was straightforward to address, but reaching the correct diagnosis required human expertise beyond what AI models could provide.

Results

Key Differences Between OpenXcom and AI-Enabled OpenXcom Engine

The AI-assisted rebuild of OpenXcom’s engine introduced significant improvements in performance, scalability, and maintainability. Frame rates saw a fourfold increase, thanks to Vulkan’s optimized rendering pipeline. The scale of development was substantial, with over 1,800 files modified in just three months—an effort that would typically require a larger team over a longer period. AI-driven tools streamlined workflows, cutting estimated development time by more than a year. The modernized codebase now supports a modular architecture, reducing technical debt and ensuring long-term adaptability for future enhancements, modding support, and cross-platform compatibility.

Performance Gains

Vulkan rendering provided a significant improvement in rendering efficiency, increasing frame rates by approximately 416% over the previous SDL implementation. This optimization was primarily achieved by eliminating the need to transfer image data to the GPU every frame. Instead, all graphical assets are stored in device-local memory, allowing the hardware to handle image copying efficiently through rasterization. By reducing CPU-to-GPU bandwidth requirements, Vulkan enables smoother animations, reduces input latency, and minimizes frame stuttering, ensuring a more responsive and consistent player experience. This shift to a GPU-driven workflow not only improves immediate performance but also lays the foundation for future scalability and graphical enhancements.

Development Scale

A total of 1,822 source files were created or modified over the course of three months by a single engineer. While a high volume of changes does not inherently guarantee quality, this number reflects the extensive scope of improvements made throughout the project. AI-assisted tools enabled rapid iteration and efficient large-scale modifications while maintaining a structured, maintainable codebase. The key benefit was not just speed but also the ability to refactor and modernize the engine without accumulating excessive technical debt, ensuring the long-term sustainability of the project.

Development Speed

The complete engine rewrite was accomplished in just three months, whereas a traditional manual approach was estimated to take 16 to 20 months. AI-assisted tools significantly expedited development by automating repetitive tasks, generating reference implementations, and reducing the time required for debugging and refactoring.

Codebase Modernization

By leveraging AI-assisted refactoring, the project saw a substantial reduction in technical debt. The modernized codebase now features a more modular architecture, improved maintainability, and better long-term scalability. These improvements ensure that future expansions, modifications, and optimizations can be implemented more efficiently without introducing unnecessary complexity.

Security

We demonstrated the use of local models such as CodeLlama and DeepSeek Coder, which provided a significant advantage in terms of security, privacy, and reliability. Unlike cloud-based AI tools, local models ensure that sensitive code and proprietary information remain within a controlled environment, reducing the risk of data leaks or external dependencies. Additionally, running models locally allows for more consistent performance without network latency, making it easier to iterate on code without interruptions. This approach also grants greater customization, enabling fine-tuning of models to better align with the specific needs of the project while maintaining full control over the AI-assisted development workflow.

Cost-Benefits of AI

Leveraging AI-driven tools allowed a single engineer to achieve what would typically require a team of 4-5 engineers, significantly reducing development costs while maintaining high efficiency. The use of AI provided multiple layers of benefits beyond just automation—allowing for rapid prototyping, refactoring assistance, and extensive debugging support, all of which contributed to an accelerated workflow.

One of the biggest advantages was the reduction in time spent on repetitive coding tasks. AI-assisted tools generated boilerplate code, suggested refactoring strategies, and provided reference implementations, allowing the engineer to focus on higher-level architectural decisions rather than being bogged down in low-level implementation details. This approach minimized technical debt while ensuring that code remained modular and maintainable.

Furthermore, AI’s ability to assist with error detection and debugging significantly reduced development bottlenecks. While AI was not a replacement for manual debugging, it provided quick interpretations of error messages, compiler warnings, and potential fixes, allowing issues to be resolved more efficiently than traditional debugging methods.

From a financial standpoint, the ability to accomplish a full engine rewrite with a single engineer drastically cut down on overhead costs. In a conventional development environment, a multi-engineer team with expertise across multiple disciplines, including graphics programming, engine architecture, and tooling, would have been required to handle the workload. The interdisciplinary nature of the project, covering everything from Vulkan integration to UI scripting and internationalization, would typically demand specialized roles. AI-driven assistance enabled a single engineer to bridge these knowledge gaps, rapidly iterating on complex systems and reducing both labor costs and project timelines. This demonstrated the tangible cost-effectiveness of integrating AI into the development process, making large-scale solo development a viable approach for modern game engine overhauls.

Lessons Learned

Challenges with Domain-Specific Knowledge

As AI models continue to evolve, their ability to handle niche technical areas will improve. However, during development, significant time was spent addressing domain-specific knowledge gaps, particularly in areas like Vulkan rendering and pixel-perfect accuracy. While AI provided useful reference implementations, it often required manual validation and correction, slowing progress.

Optimization of Pixel Rendering

A major learning point was the inefficiency in how pixel rendering challenges were approached. The need for absolute accuracy in a 320x200 resolution game meant that even minor discrepancies had noticeable visual impacts. Instead of relying on AI to identify the issue, it became clear that expert knowledge was necessary. Fortunately, industry experts within our network provided key insights, leading to a rapid diagnosis and resolution. This highlighted the importance of recognizing when AI is best used as an assistant rather than as a replacement for domain expertise.

Font Layout and Rendering Requirements

Text rendering, especially for internationalization, presented unexpected hurdles. While HarfBuzz and libunibreak provided the necessary tools for complex script support, early decisions on layout requirements increased development complexity. Reducing initial scope—focusing on foundational rendering first before tackling advanced typography features—could have streamlined implementation while still achieving the project’s primary goals.

Next Steps

- Integrate Large Language Models In-Game – Explore the use of AI to dynamically generate soldier backstories, obituaries, and end-of-month mission summaries, enriching the narrative depth and immersion of the game.

- Enhance Procedural Generation – Expand the procedural generation capabilities to create more varied battlescapes, mission types, and potentially richer lore to further develop the alien presence in the game.

- Leverage AI for Art Generation – Utilize the game as a testbed for AI-driven art generation, including experimenting with 3D model generation and upscaling techniques to enhance visual fidelity while preserving the game’s original aesthetic.

- Expand AI-Driven Optimizations – Continue refining AI behavior in-game to create more intelligent and adaptive enemy and soldier tactics.

- Improve AI-Assisted Modding Support – Develop enhanced AI-assisted UI tools to streamline modding workflows, making it easier for the community to create and customize content.

- Refine Vulkan Rendering – Further optimize Vulkan rendering for cross-platform performance, ensuring seamless compatibility and efficient resource utilization across different hardware configurations.

Conclusion

The successful redevelopment of OpenXcom’s engine by a single engineer in just three months serves as a compelling demonstration of AI-assisted engineering in game development. What would typically require a multi-disciplinary team—spanning graphics programming, engine architecture, UI scripting, and debugging—was made possible through the strategic use of AI tools. Generative AI streamlined many aspects of the project, from generating reference implementations and resolving error messages to refactoring legacy code and expediting complex problem-solving.

Despite the undeniable benefits, this process also underscored the challenges that still exist in AI-assisted development. AI struggled with domain-specific knowledge, often assuming the Vulkan-based project was focused on 3D rendering rather than its actual role as a memory-efficient 2D rendering pipeline. It reinforced suboptimal design choices rather than critically evaluating them and frequently required manual intervention to correct hallucinations and misinterpretations of project architecture. However, these challenges were not insurmountable, and with expert oversight, AI-assisted workflows led to significant time savings and efficiency gains.

This project provides tangible evidence that AI can serve as a force multiplier for developers, enabling them to take on projects of a scale that would otherwise be impractical. Game studios looking to optimize their development pipelines can benefit greatly from AI-driven tooling, reducing labor costs and accelerating iteration cycles without compromising on quality. While AI is not yet a replacement for human expertise, it has proven to be an invaluable asset, reshaping how games are developed.

For developers, studios, and industry professionals alike, the message is clear: AI-assisted engineering is not just a theoretical advantage—it’s a practical reality. The future of game development is one where AI enables smaller teams and even solo developers to build complex, high-quality experiences at a pace that was once unimaginable.

Get Involved

The OpenXcom engine rewrite is an ongoing project, and community feedback is invaluable in refining and expanding its capabilities. Developers, modders, and enthusiasts are encouraged to explore the repository, experiment with the new engine, and contribute by reporting issues or suggesting improvements. If you’re interested in testing, modding, or further enhancing the project, visit the GitHub repository at OpenXcom on GitHub and join the discussion. Your insights and contributions can help shape the future of this AI-assisted game engine!